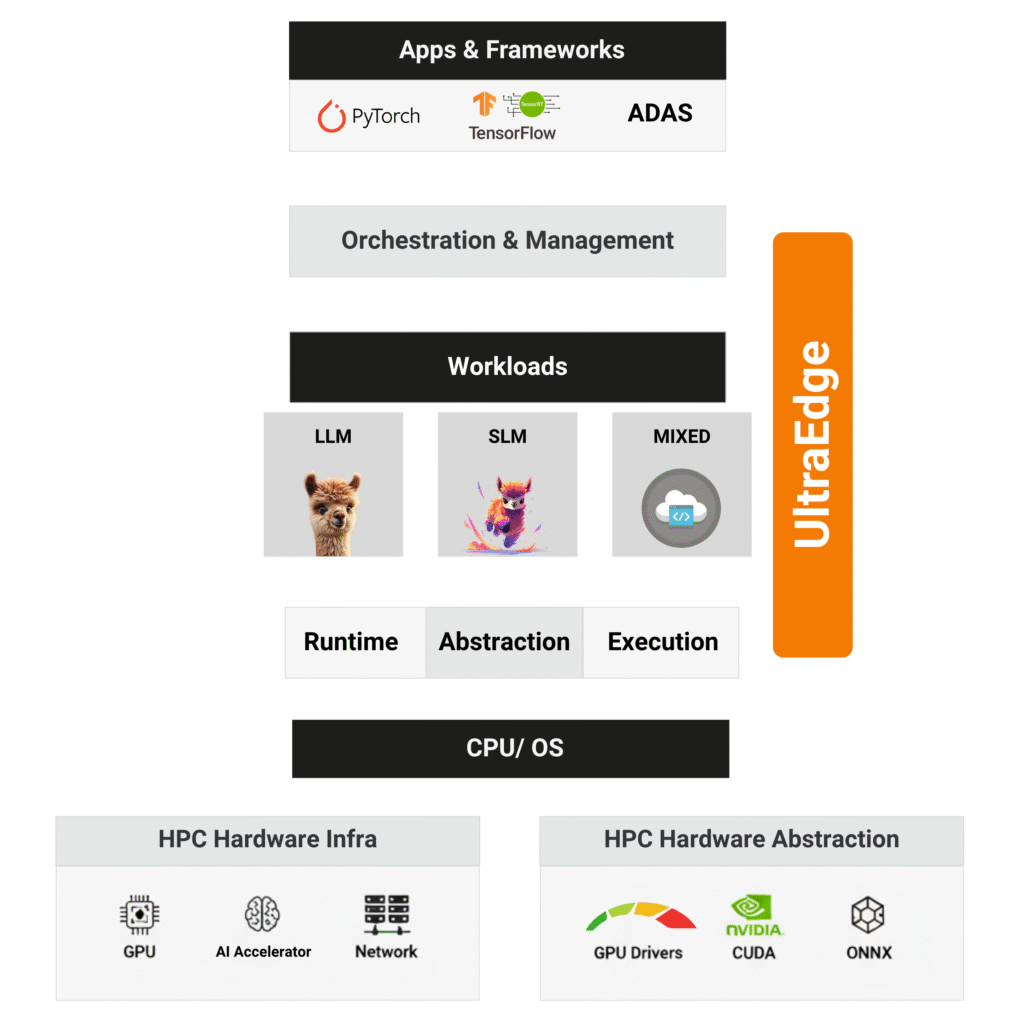

UltraEdge: The Execution Fabric for AI & Mixed Workloads

UltraEdge is a lean, modular, high-performance execution layer that runs on CPUs and HPC systems while orchestrating GPUs, NPUs, and custom accelerators, delivering deterministic performance for AI and mission-critical workloads.

UltraEdge anchors execution on CPUs while orchestrating GPUs and accelerators — turning fragmented hardware into a unified high-performance layer.

“UltraEdge in action — packaging and executing workloads instantly with the MicroPac CLI.”

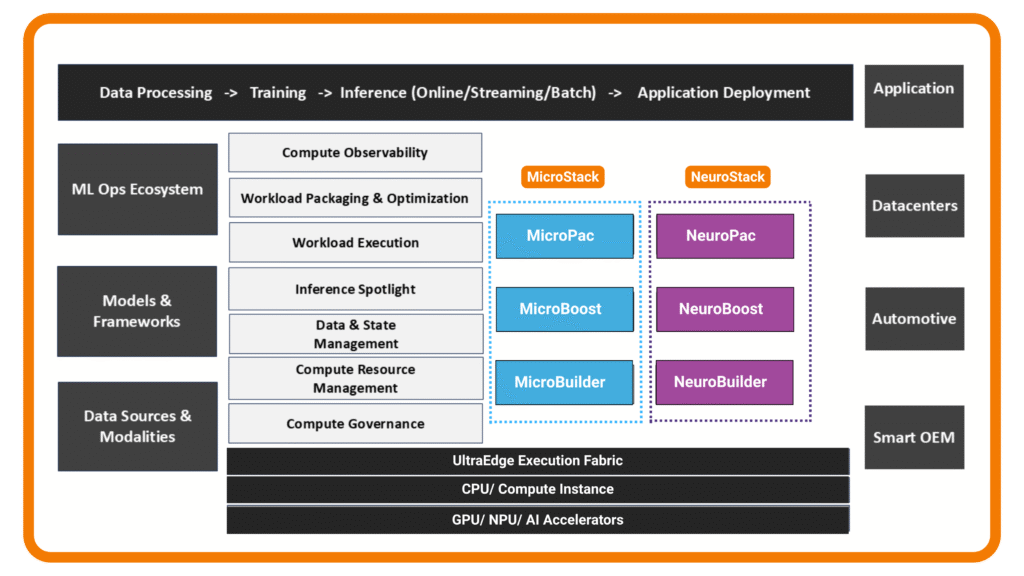

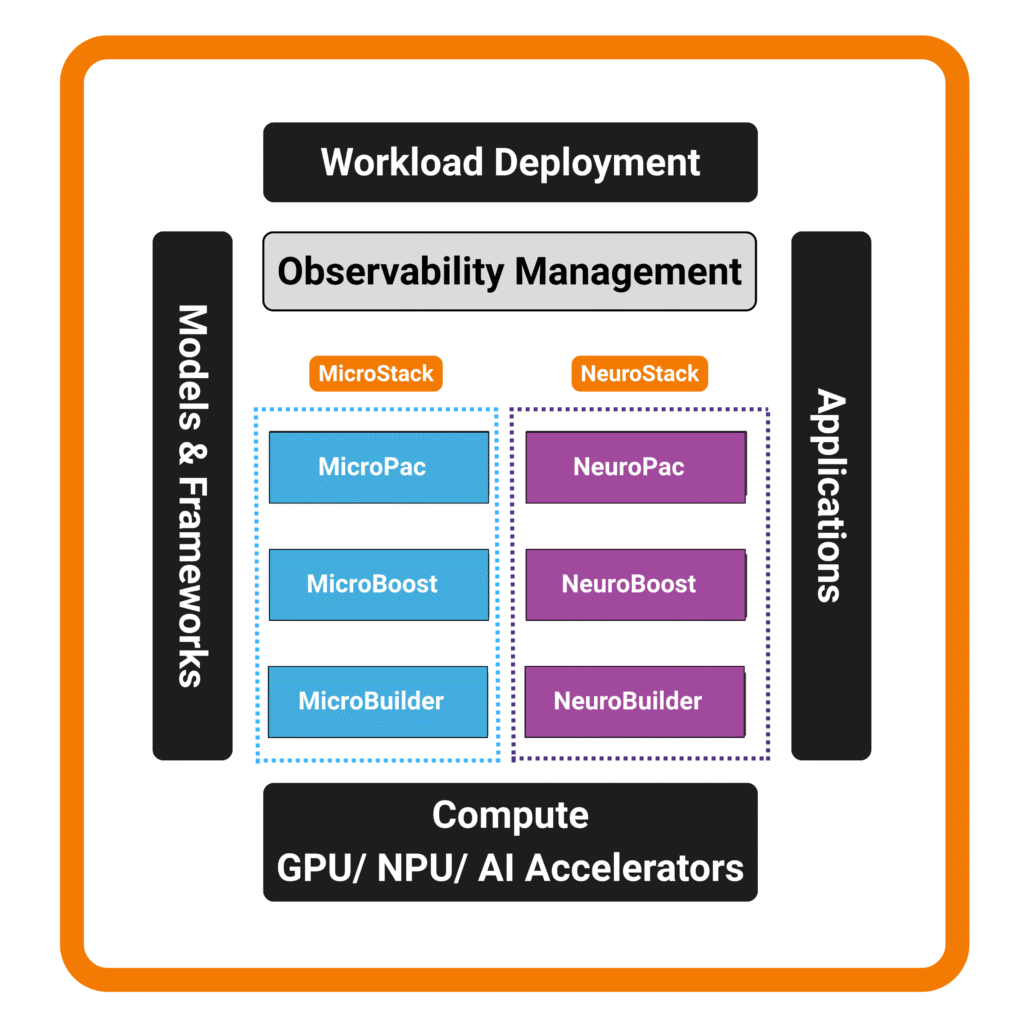

The Functional Architecture of UltraEdge

UltraEdge delivers its capabilities through modular Stacks. Each Stack combines packaging, runtimes, and developer tooling, optimized for its workload type but unified inside one execution fabric.

Our Unique Value

UltraEdge is built from first principles: not as a rework of legacy execution stacks, but as a clean execution fabric purpose-built for AI and mixed workloads on HPC systems.

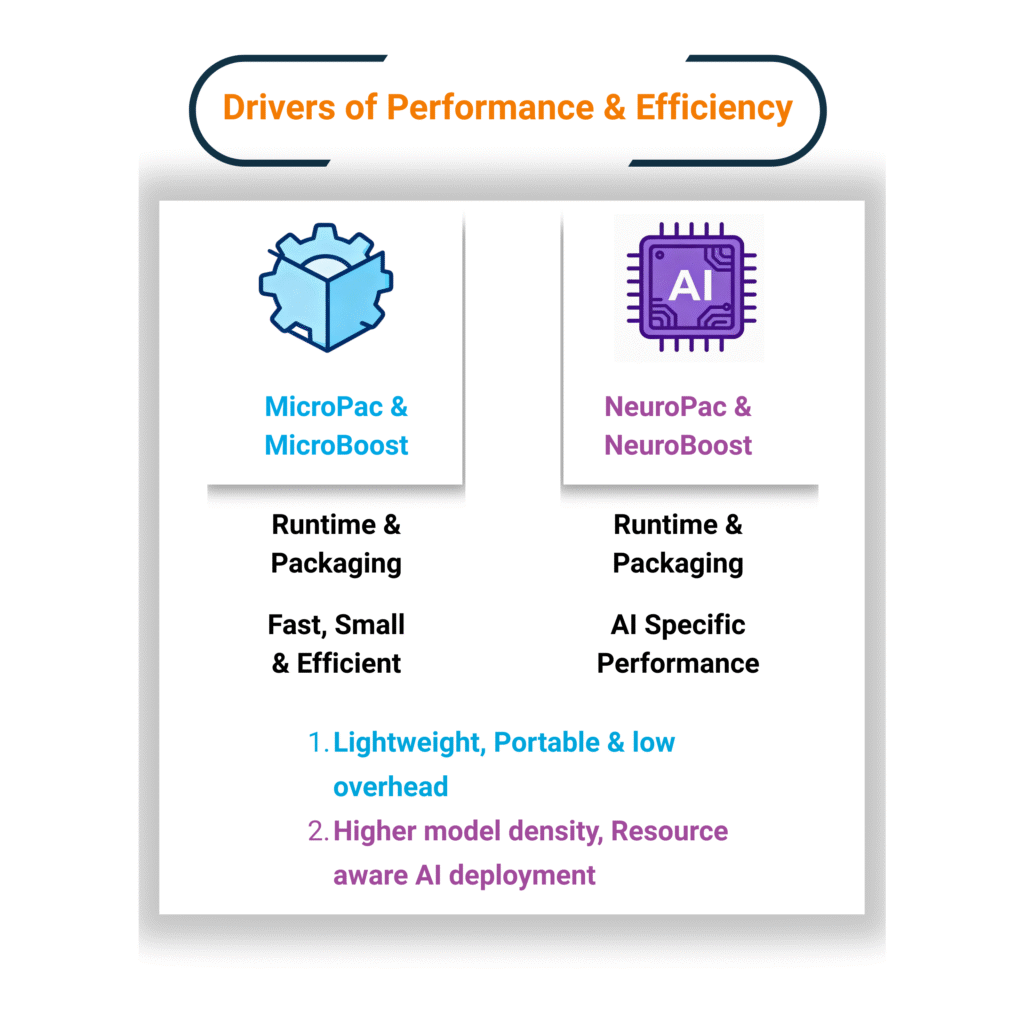

Reinvented Execution

Powered by MicroBoost + MicroPac and NeuroBoost + NeuroPac, a lean runtime and packaging system that eliminates execution bloat.

AI-Native Performance

Driven by NeuroBoost + NeuroPac — purpose-built for real-time inference and large-scale AI training.

Energy Efficiency at Scale

Enabled by UltraEdge’s lean stacks — delivering higher performance per watt and lower energy draw in HPC deployments.

Unified Orchestration

Integrated through UnCloud + policy controls — unifying AI and mission workloads across CPUs, GPUs, NPUs, and accelerators.

Designed by Practitioners

Shaped by real HPC + AI experience — built by a team that lived the execution problem before solving it.

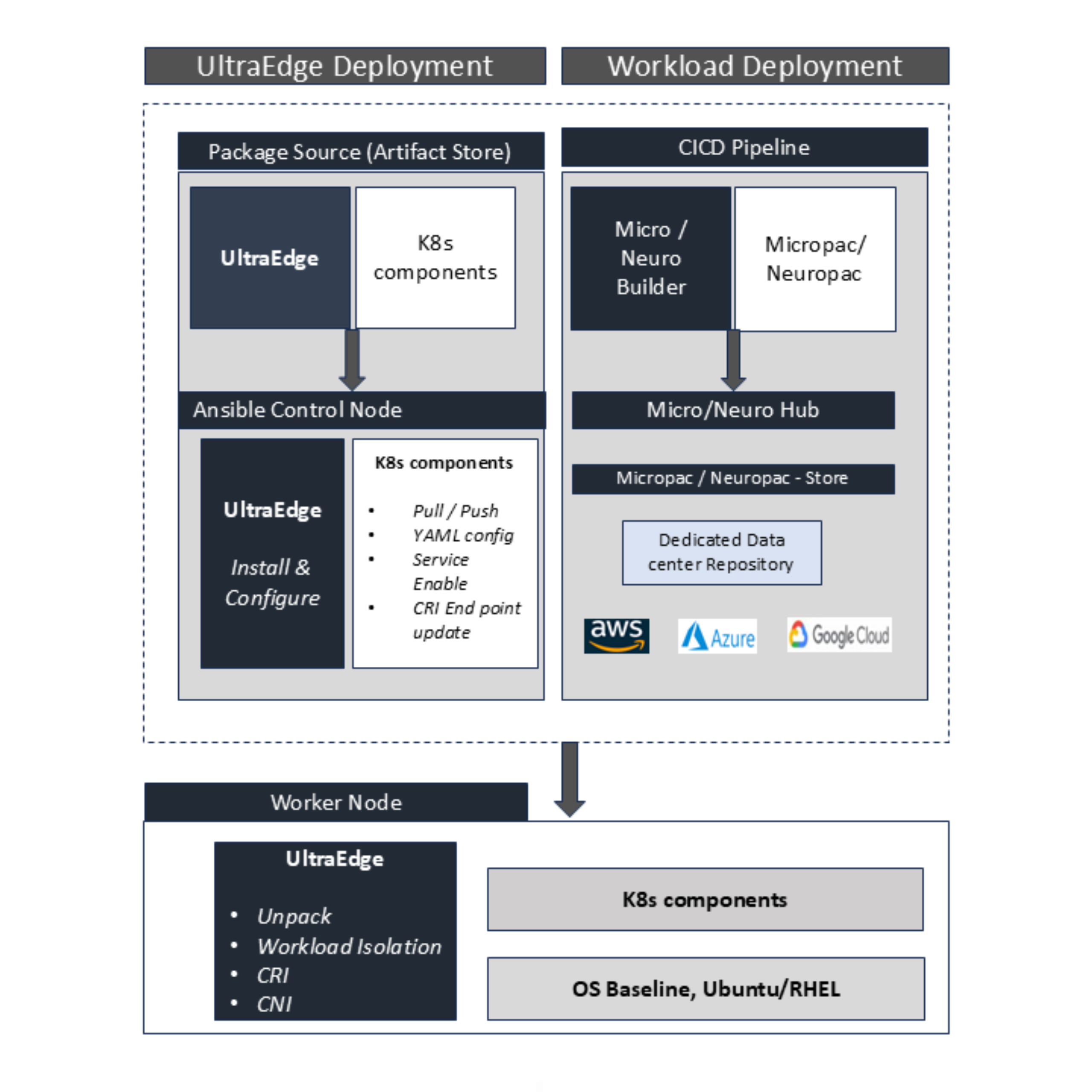

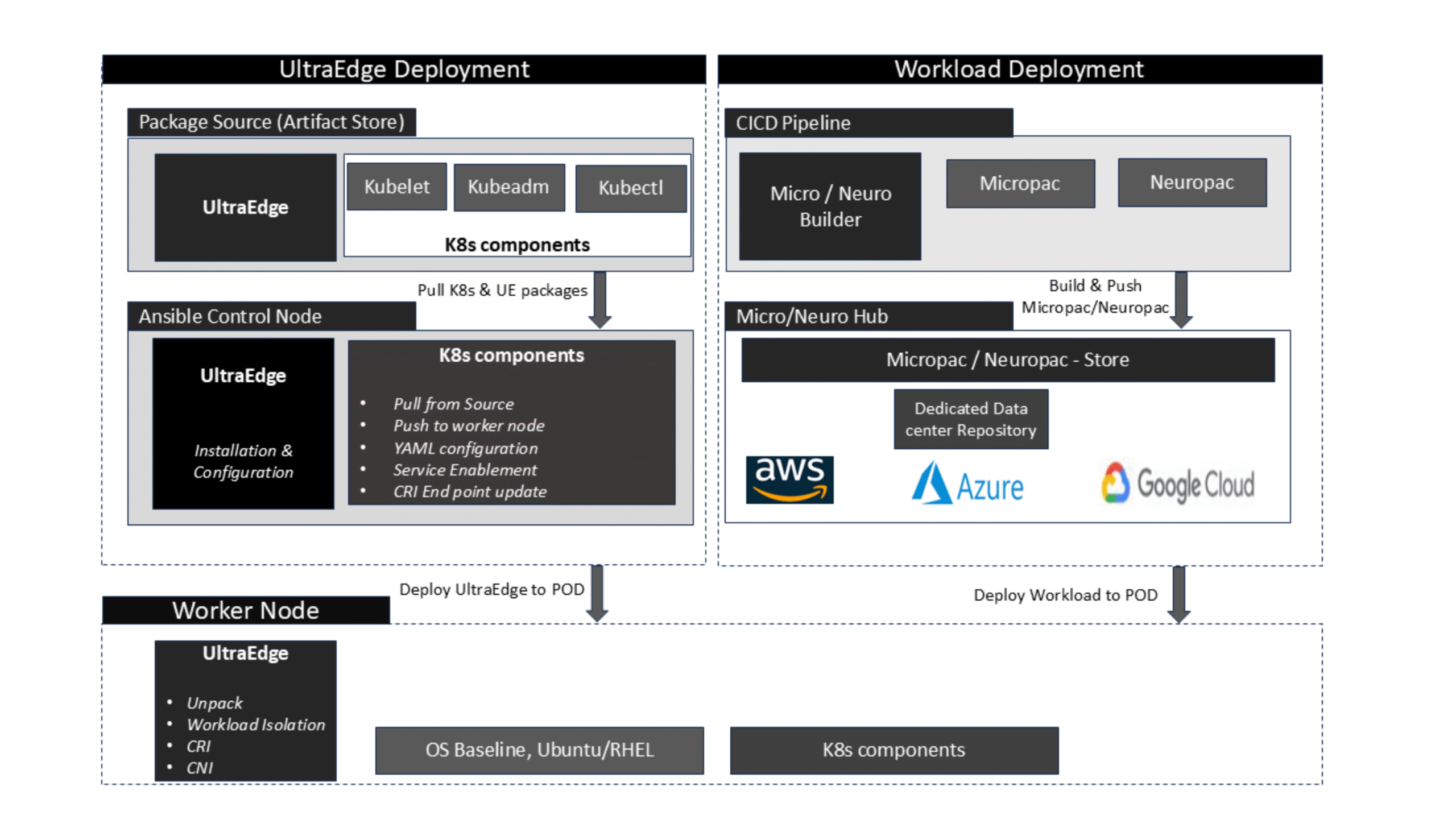

Deploy, Execute, and Scale with Confidence

UltraEdge integrates seamlessly into enterprise and hyperscale environments, enabling deterministic execution and fleet-wide scalability.

Deploy workloads deterministically, execute them at peak efficiency, and scale across heterogeneous compute.

Optimize your workloads. Maximize your performance.

Let’s discuss how TinkerBloX can streamline your AI and mixed workload execution — from training clusters to real-time inference.